Lambda Calculus and Lisp, part 1

The first of a series of envisioned blog posts on lambda calculus, and Lisp. It’s unclear exactly where to start: there is a whole heap of interesting issues, both theoretical and in terms of concrete implementations, which tangle and interconnect.

A particular application of lambda calculus is a very salient part of my “day job” as a formal semanticist of natural language. And my interests in Emacs and lisp(s) feel like they tie in here as well—though that’s a question in itself which is probably as good of a starting point into this (planned) series of posts as any.

There is much to explore: origins of John McCarthy‘s Lisp and Alonzo Church‘s lambda calculus; encodings of the simple made complex by restriction to a limited set of tools; recursion, fixed points, and paradoxes; infinities, philosophy, and engineering. But much of this requires stage setting.

And finding an exact entry point is yet tricky. But perhaps we start with λ: the divining rod, the wizard’s crooked staff, as it is the key component of much magic of a sort.

Lisp: LAMBDA the Ultimate? #

We’ll start on the programming side, before turning to more

philosophical or mathematical abstractions, with lambda.

It is now not only Lisps which contain lambda as a keyword, many/most

programming languages have lambda as a keyword, usually for the

introduction of an anonymous function. That is, an unnamed function,

sometimes for one-off use.

But in McCarthy’s original formulation of LISP in 1958, LAMBDA

was used as the basis for the implementation of functions generally:

Let

fbe an expression that stands for a function of two integer variables. It should make sense to writef(3, 4) and the value of this expression should be determined. The expressiony^2 + xdoes not meet this requirement;y^2 + x(3, 4)is not a conventional notation, and if we attempted to define it we would be uncertain whether its value would turn out to be 13 or 19. Church calls an expression likey2 + x, a form. A form can be converted into a function if we can determine the correspondence between the variables occurring in the form and the ordered list of arguments of the desired function. This is accomplished by Church’s λ-notation. [p.6]….

{λ[[x_1;…; x_n]; 𝓔]}∗is(LAMBDA, (x∗_1,…, x∗_n), 𝓔∗). [p.16][McCarthy 1960:6, 161]

As in lambda calculus, LAMBDA binds variables, and replaces any

occurrences of them in the scope of the operator with whatever it

receives as arguments.

So the expression:

(LAMBDA (x y z) (+ (* y x) (* z x)))

if given the arguments 5, 2, 3, would replace x's with 5; y's with 2,

and z's with 3:

(+ (* 2 5) (* 3 5)) ;; = (+ 10 15) = 25

The illusion of a blue-suffused platonic universe of car's #

The origin of lambda keywords in LISP, and the origin of LISP’s LAMBDA

in lambda calculus has suggested the idea that LISP was something like

an implementation of lambda calculus as a programming language, and

the certain mysticism2 attaching to both suggests perhaps a tighter

surface association than there is direct evidence for.

![Figure 1: xkcd 224 [see https://www.explainxkcd.com/wiki/index.php/224:_Lisp]](https://imgs.xkcd.com/comics/lisp.jpg)

Figure 1: xkcd 224 [see https://www.explainxkcd.com/wiki/index.php/224:_Lisp]

This is the topic of a 2019 blog post based on his talk for the Heart of Clojure conference in Daniel Szmulewicz expands on the theme “Lisp is not a realization of the Lambda Calculus”.3 One of the points Szmulewicz draws attention to is McCarthy’s own words:

…one of the myths concerning LISP that people think up or invent for themselves becomes apparent, and that is that LISP is somehow a realization of the lambda calculus, or that was the intention. The truth is that I didn’t understand the lambda calculus, really.

[McCarthy 1978b:1904]

In the paper version of the talk, McCarthy makes a similar point, but it’s worth looking at it in the larger context:

…how do you talk about the sum of the derivatives, and in programming it, there were clearly two kinds of programs that could be written.

One is where you have a sum of a fixed number of terms, like just two, where you regard a sum as a binary operation. And then you could write down the formula easy enough. But the other was where you have a sum of an indefinite number of terms, and you’d really like to make that work too. To make that work, what you want to be able to talk about is doing the same operation on all the elements of a lit. You want to be able to get a new list whose elements are obtained from the old list by just taking each element and doing a certain operation to it.

In order to describe that, one has to have a notation for functions. So one could write this function called

mapcar. This says, “Apply the functionfto all the elements of the list.” If the list is null then you’re going to get aNILhere. Otherwise you are going to apply the function to the first element of the list and put that onto a front of a list which is obtained by doing the same operation again to the rest of the list. So that’smapcar. It wasn’t calledmapcarthen. It was calledmaplist, butmaplistis something different, which I will describe in just a moment.That was fine for that recursive definition of applying a function to everything on the list. No new ideas were required. But then, how do you write these functions?

And so, the way in which to do that was to borrow from Church’s Lambda Calculus, to borrow the lambda definition. Now, having borrowed this notation, one the myths concerning LISP that people think up or invent for themselves becomes apparent, and that is that LISP is somehow a realization of the lambda calculus, or that was the intention. The truth is that I didn’t understand the lambda calculus, really. In particular, I didn’t understand that you really could do conditional expressions in recursion in some sense in the pure lambda calculus. So, it wasn’t an attempt to make the lambda calculus practical, although if someone had started out with that intention, he might have ended up with something like LISP.

[McCarthy 1978a:189–1905]

The Discovery of LISP #

Two bits from the end of this I want to highlight. The first, well, it’ll come up in future posts, and probably later in this post itself, and it has to do with recursion:

“I didn’t understand that you really could do conditional expressions in recursion in some sense in the pure lambda calculus”

And the second is that McCarthy hedges his “LISP as a realisation of the lambda calculus is myth” stance slightly:

“So, it wasn’t an attempt to make the lambda calculus practical, although if someone had started out with that intention, he might have ended up with something like LISP."

This does fit rather well with (and perhaps suggested) the framing that Paul Graham does of McCarthy as the “discoverer” of Lisp — like Euclid of geometry — rather than its inventor in his “The Roots of Lisp” paper:

In 1960, John McCarthy published a remarkable paper in which he did for programming something like what Euclid did for geometry. He showed how, given a handful of simple operators and a notation for functions, you can build a whole programming language. He called this language Lisp, for “List Processing,” because one of his key ideas was to use a simple data structure called a list for both code and data.

It’s worth understanding what McCarthy discovered, not just as a landmark in the history of computers, but as a model for what programming is tending to become in our own time. …. In this article I’m going to try to explain in the simplest possible terms what McCarthy discovered. The point is not just to learn about an interesting theoretical result someone figured out forty years ago, but to show where languages are heading. The unusual thing about Lisp—in fact, the defining quality of Lisp—is that it can be written in itself.

[Graham 2002:16 (emphasis mine)]

Graham’s paper itself, as well as some of Graham’s other publications/postings (e.g., talking about Lisp as a sort of “secret weapon” in comparison to “blub languages”)7 is perhaps another contributor to the mystique of Lisp. (With the flip side of “Lisp as the Cleveriest Hacker’s Secret Weapon” enchanted coin being “the Curse of Lisp”8.)

There are other components of the history of LISP, which are suggestive of discovery rather than invention. McCarthy’s initial aim for LISP was more akin that of the Turing Machine: as a formal abstraction describing a mathematical model whose components were simple and few but yet was capable of performing any (and all) arbitrary computational operation:

One mathematical consideration that influenced LISP was to express programs as applicative expressions built up from variables and constants using functions. I considered it important to make these expressions obey the usual mathematical laws allowing replacement of expressions by expressions giving the same value. The motive was to allow proofs of properties of programs using ordinary mathematical methods. This is only possible to the extent that side effects can be avoided. Unfortunately, side effects are often a great convenience when computational efficiency is important, and “functions” with side effects are present in LISP. However, the so-called pure LISP is free of side effects, and Cartwright (1976) and Cartwright and McCarthy (1978) show how to represent pure LISP programs by sentences and schemata in first-order logic and prove their properties. This is an additional vindication of the striving for mathematical neatness, because it is now easier to prove that pure LISP programs meet their specifications than it is for any other programming language in extensive use. (Fans of other programming languages are challenged to write a program to concatenate lists and prove that the operation is associative.)

Another way to show that LISP was neater than Turing machines was to write a universal LISP function and show that is briefer and more comprehensible than the description of a universal Turing machine. This was the LISP function

eval[e,a], which computers the value of a LISP expressione, the second argumentabeing a list of assignments of values to variables. (ais needed to make the recursion work.) Writingevalrequired inventing a notation representing LISP functions as LISP data, and such a notation was devised for the purposes of the paper with no thought that it would be used to express LISP programs in practice. Logical completeness required that the notation used to express functions used as functional arguments be extended to provide for recursive functions, and theLABELnotation was invented by Nathaniel Rochester for that purpose. D.M.R. Park pointed out thatLABELwas logically unnecessary since the result could be achieved using onlyLAMBDA— by a construction analogous to Church’s Y-operator, albeit in a more complicated way.S.R. Russell noticed that

evalcould serve as an interpreter for LISP, promptly hand coded it, and we now had a programming language with an interpreter.[McCarthy 1978:1795]

(There’s a lot going on in this passage, and its context.

Notions of reasons to avoid side-effects (an ideal of “functional programming”, emphasised in Haskell and Clojure (another lisp)): thus the “functional” mode of lambda calculus rather than the “everything is state” mode of Turing machines and their infinitely long memory tapes.

Recursion again (which we’ll get to, repeatedly).

And Alonzo Church, the originator (discoverer?)^{see “X” below} of lambda calculus, who we’ll talk more about soon, and who was also Alan Turing’s PhD supervisor at Princeton.

But, first: let’s turn back to the topic of the concrete instantiation of Lisp on physical hardware by Stephen Russell.)

![Figure 2: cover of Byte Magazine August 1979 [full mag here]](https://babbagefiles.xyz/ox-hugo/lisp-byte_magazine-1979.jpg)

Figure 2: cover of Byte Magazine August 1979 [full mag here]

Elsewhere, McCarthy makes clear that he hadn’t thought at that point of LISP being instantiatable but as a theoretical exploration of computing at an abstract level. But, instead, the theoretical description translated fairly easily and directly to a runnable program, a LISP interpreter:

This

EVALwas written and published in the paper and Steve Russell said, “look, why don’t I program thisEVAL” … and I said to him, “ho, ho, you’re confusing theory with practice, thisEVALis intended for reading, not for computing”. But he went ahead and did it. That is, he compiled theEVALin my paper into IBM 704 machine code, fixing bugs, and then advertised this as a Lisp interpreter, which it certainly was. So at that point Lisp had essentially the form that it has today…[McCarthy 1974a:3079]

“No compute. Only read.” “Hell, EVAL that.” #

This is the EVAL code “in the paper” referred to above:

eval[e; a] = [

atom [e] → assoc [e; a];

atom [car [e]] → [

eq [car [e]; QUOTE] → cadr [e];

eq [car [e]; ATOM] → atom [eval [cadr [e]; a]];

eq [car [e]; EQ] → [eval [cadr [e]; a] = eval [caddr [e]; a]];

eq [car [e]; COND] → evcon [cdr [e]; a];

eq [car [e]; CAR] → car [eval [cadr [e]; a]];

eq [car [e]; CDR] → cdr [eval [cadr [e]; a]];

eq [car [e]; CONS] → cons [eval [cadr [e]; a]; eval [caddr [e]; a]];

T → eval [cons [assoc [car [e]; a]; evlis [cdr [e]; a]]; a]];

eq [caar [e]; LABEL] → eval [cons [caddar [e]; cdr [e]];

cons [list [cadar [e]; car [e]; a]]];

eq [caar [e]; LAMBDA] → eval [caddar [e];

append [pair [cadar [e];

evlis [cdr [e]; a]; a]]]

evcon[c; a] = [eval[caar[c]; a] → eval[cadar[c]; a]; T → evcon[cdr[c]; a]]

evlis[m; a] = [null[m] → NIL; T → cons[eval[car[m]; a]; evlis[cdr[m]; a]]]

Translated into slightly more familiar Lisp style (with added

named-function-making label's), it is:10

(label eval

(lambda (e a)

(cond

((atom e) (assoc e a))

((atom (car e))

(cond

((eq (car e) 'quote) (cadr e))

((eq (car e) 'atom) (atom (eval (cadr e) a)))

((eq (car e) 'eq) (eq (eval (cadr e) a)

(eval (caddr e) a)))

((eq (car e) 'car) (car (eval (cadr e) a)))

((eq (car e) 'cons) (cons (eval (cadr e) a)

(eval (caddr e) a)))

((eq (car e) 'cond) (evcon (cdr e) a))

('t (eval (cons (assoc (car e) a)

(cdr e))

a))))

((eq (caar e) 'label) (eval (cons (caddar e) (cdr e))

(cons (list (cadar e) (car e)) a)))

((eq (caar e) 'lambda) (eval (caddar e)

(append (pair (cadar e)

(evlis (cdr e) a))

a))))))

(label evcon

(lambda (c a)

(cond ((eval (caar c) a)

(eval (cadar c) a))

('t

(evcon (cdr c) a)))))

(label evlis

(lambda (m a)

(cond ((null m) '())

('t (cons (eval (car m) a)

(evlis (cdr m) a))))))

The above, given the few additional definitions for convenience immediately following, is a full LISP interpreter.11

(label null

(lambda (x)

(eq x '())))

(label and

(lambda (x y)

(cond (x (cond (y 't) ('t '())))

('t '()))))

(label not

(lambda (x)

(cond (x '())

('t 't))))

(label append

(lambda (x y)

(cond ((null x) y)

('t (cons (car x)

(append (cdr x) y))))))

(label pair

(lambda (x y)

(cond ((and (null x) (null y)) '())

((and (not (atom x)) (not (atom y)))

(cons (list (car x) (car y))

(pair (cdr x) (cdr y)))))))

(label assoc

(lambda (x y)

(cond ((eq (caar y) x) (cadar y))

('t (assoc x (cdr y))))))

The brevity of the code combined with the details of the story of the

implementation: seemingly the theoretical code works without

translation, suggesting a sort of natural discovery. But, the nature

of even the theoretical, pre-implementation code did not exist in some

Platonic heaven of mathematics, as can be seen by the nature of some

of the operations, particularly car and cdr (often in modern Lisps,

especially Scheme and Scheme-influenced Lisps, rendered instead more

transparently as first and rest.)

The Non-Platonic mechanics of 1950s IBM mainframes #

LISP was designed with the IBM 704-style architecture in mind, and car

and cdr make some reference to particular details of the hardware,

where the IBM 704 had “address” and “decrement” fields in memory index

registers (≈locations in physical RAM), and car referenced the

“address” field of the register (so CAR = “C-ontents of the A-ddress

part of the R-egister”) and cdr the “decrement” field of the register

(CDR = “C-ontents of the D-ecrement part of the R-egister”).12

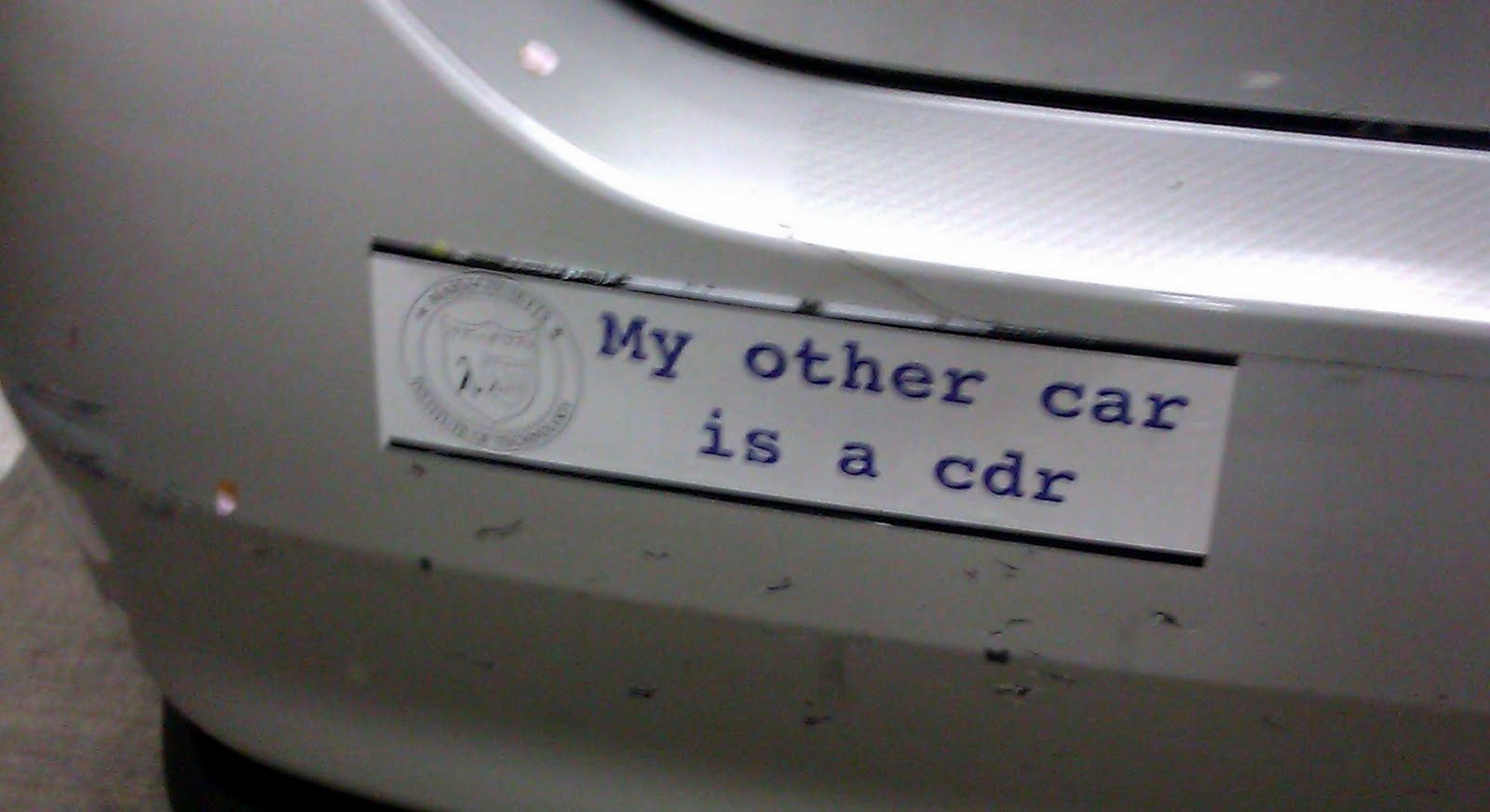

Figure 3: my other car is a cdr

Lists in Lisp are singly-linked lists, with each node in the list

having a “value” field and a “next” field, which points to the next

node; these “value” and “next” fields correspond to the car and

cdr operations. So, if we have a list like (a b c), the first node in the list has a

“value” field of a and a “next” field pointing at another node, with

this second node actually itself being — not b — but rather the list

(b c). A proper list in Lisp is nil-terminated: that is, the last item

in the list is actually nil (which is the empty list '()).

The operation cons above (for CONstructor) is a pair-forming operation

(where the pairs correspond to the “value” and “next” fields),

returning what are variously called (in different lisps) “conses” or

“pairs”. Not all conses/pairs are lists (at least in most Lisps),

since a proper list in Lisp is nil-terminated.

The operation (cons 'a 'b) will return a cons'ed object with

essentially a single node, where the “value” field will be a and the

“next” field will be b. Lisps will usually print such non-list conses

(sometimes called “improper lists”) as “dotted lists”, i.e., (cons 'a 'b) will print out as (a . b).

The list (a b c) is actually the result of doing (cons a (cons b (cons c nil))) and would be printed in dotted-pair notation as (a . (b . (c . nil))) [which is equivalent to (a . (b . (c . ()))), since nil is

the empty list].

Unsurprisingly, a lot of early/traditional Lisp programming involves manipulations of lists. But all modern Lisps implement other types of data structures as well, including vectors/arrays, hash tables, objects, and so on.

But, on the main topic of (eq 'lisp 'lambdacalculus), others have also

pointed out the concrete hardware-connections of LISP from early days,

telling against the “Lisp-as-pure-formal-invention-discovery” or

“Lisp as (semi-)direct implementation of lambda calculus” notions:

Lisp was intended to be implemented on a computer from day 0. For their IBM 704. Actually Lisp is based on earlier programming experience. From 56 onwards John McCarthy implemented Lisp ideas in Fortran & FLPL. Then 58 the implementation of Lisp was started. 59 a first runnable version was there. 1960 there was the Lisp 1 implementation. The widely known paper on the Lisp implementation and recursive function theory was published in 1960. But his original prime motivation was not to have a notation for recursive function theory, it was to have a list processing programming language for their IBM 704 for AI research.

Lisp as designed by McCarthy was very different from lambda calculus.

[going on to point to the Stoyan (1984) Early LISP history (1956 - 1959) paper.]

There’s obviously much more to explore for the early history of Lisp and the nature of its connections to lambda calculus, but this much at least should give a general sense of the distinctions/divergences between Lisp and lambda calculus, while not ignoring important interconnections between them.

Reaching the '() of the line #

And so while it’s tempting to delve off into other interesting

features (homoiconicity!) of LISP/Lisps and their history and dialects

(the Common Lisp of Endor; Schemes, Rackets, Chickens and Guile (oh

my!), Clojure, Fennel and others), I’d wanted to get to lambda

calculus proper much earlier in this piece already, and so we’ll set

our (lispy) parens down for a moment, and trade in our LAMBDA for a λ.

Cattle-prodding functions: the lambda calculus #

The aforementioned Alonzo Church13, a Princeton mathematician who supervised 31 doctoral students during his career, influencing others important researchers (including Haskell Curry [for whom the programming language Haskell is named; as well as the operating of currying]), developed (the) lambda calculus as part of his research into the foundations of mathematics.

Lambda calculus is Turing complete, thus equivalent in computation power to a Turing machine and a universal model of computation.

There are very few bits of machinery in basic untyped lambda calculus. (A reason for which it seemed to be attractive to McCarthy as a touchstone.)

Lambda calculus has variables and lambdas; function application; a reduction operation (which may follow function application); and a convenience variable-renaming operation.

More specifically, we have:

- variables, like

x, which are characters or strings representing “a parameter” - lambda abstraction: essentially just the definition of a function,

specifying its input (by a bound variable, say,

λx) and returning an output (say,M). E.g., the expressionλx.Mwill take an input, and replace any and all instances ofxin the bodyM14 with whatever the input was. (The.separates the lambda and specification of bound variable (herex) (“input taker”) from the body (the “output”).) - function application: a representation like

(M N), the functionMapplies toN, where bothMandNare some sort of lambda terms. - β-reduction (beta reduction): bound variables inside body of the

expression are replaced by inputs “taken” by the lambda

expressions. The basic form:

((λx.M) N)→(M[x := N]). (That is, an expression(λx.M)combining with an expressionNreturns(M)where where all instances ofxinside ofMare replaced withN.)

(Setting aside rule 4 for the moment.)

For example, we might have an expression:

λf.λx.(f x)

This would be an expression which combines, one at a time, with two

inputs, the lambdas operating from left to right, and then applying

the f input to the x input.

To see this in full working order, we need to specific one of the two remaining operations (both reduction operations):

So, turning to rule 4 for β-reduction, taking our expression from above and providing it with inputs, and walking through the (two) application+β-reduction steps one at a time:

((λf.λx.(f x)) b a) =

((λx.(b x)) a) =

(b a)

That is, first, the leftmost lambda, λf, “takes” the leftmost argument b

(in “taking” an argument, it “discharges” and disappears) and the

(single) bound instance of the variable f in the body is replaced by

b. Then, the same thing happens with the remaining lambda, λx, and the

remaining argument, a. The result is a function where b applies to

a. (Though since in this case there are no more lambdas, nothing more

happens.)

Since (b a) looks somewhat unexciting/opaque, we can imagine a sort of

hybrid proper untyped lambda calculus/Lisp hybrid language — let’s

call this toy language of ours ΛΙΣΠ — and illustrate what things might

look like there (assuming here that numbers are numbers and #'* is a lispy

prefix multiplication function):

((λf.λx.λy.(f x y)) #'* 6 7) =

((λx.λy.(* x y)) 6 7) =

((λy.(* 6 y)) 7) =

(* 6 7) =

42

This isn’t how mathematics works in classical untyped lambda calculus

— because we only have the 4 rules/entities enumerated above [plus a

variable-clash reduction operation called α-reduction]15 and nothing

else16: no integers, no stipulated mathematical operations, no car or

cdr or cons or eq or anything — we can do all of these things in

lambda calculus with the tools we have, and we’ll explore that in

another post, but for now I just wanted to show you the toy ΛΙΣΠ

language snippet as I find something that feels a bit more familiar

and concrete can be helpful for understanding the notional unpinnings

of what’s going on in lambda calculus.

Ok, so there’s no integers or cars, but what’s all this about cattle prods? #

Well, why is λ / LAMBDA the “function”-making operator? Maybe just

eeny-meeny-miney-moe amongst Greek letters, but at least at one point

Church explained that [A. Church, 7 July 1964. Unpublished letter to

Harald Dickson, §2] that it came from the notation “x̂” used for

class-abstraction by Whitehead and Russell in their Principia

Mathematica (which we’re refer to later on), by first modifying “x̂” to

“^x” to (and then for better visibility to “∧x”) to distinguish

function-abstraction from class-abstraction, and then changing “∧”

(which is similar to uppercase Greek “Λ”) to (lowercase Greek) “λ” for

ease of printing (and presumably to avoid confusion with other

mathematical uses of “∧”, e.g., logical AND).17

[So maybe “x̂” ⇒ “^x” → “∧x” → “λx”.]

The Greek lambda (uppercase Λ, lowercase λ) as an ortheme itself derives ultimately from a Semitic abjad, specifically from the Phoenician lāmd 𐤋, which (like most letters began as a pictogram of sorts) is considered to originate from something like an ox-goad, i.e., a cattle prod, or else a shepherd’s crook, i.e., a pastoral staff. (The reconstructed Proto-Semitic word *lamed- means a “goad”.)18

Are We in Platonic Heaven Yet? #

Lambda calculus, not being tied to any particular hardware and being a true formula abstraction, feels like something that might have a better claim to being something like a property of the universe that one might discover (I admit I find it hard not to feel something of the sort — but then I’ve used lambda calculus for work on natural language within a framework that wants to assign some sort of reality to our formalisations, so it’s hard not to be pulled in this direction), however, Church says (of his own formalism):

We do not attach any character of uniqueness or absolute truth to any particular system of logic. The entities of formal logic are abstractions, invented because of their use in describing and systematizing facts of experience or observation, and their properties, determined in rough outline by this intended use, depend for their exact character on the arbitrary choice of the inventor.

[Alonzo Church 1932:34819]

(This seems part of Church’s constructivist philosophy, in common with Frege.)20

What’s next? : λy.(equal? (cdr L) y) #

We’ve pulled at a lot of disparate threads here, trying to explore the nature of the connections between Lisp and (the) lambda calculus. Neither the idea that Lisp is a direct instantiation of lambda calculus nor the idea that McCarthy was largely ignorant of properties of lambda calculus are quite right. But that there are an interesting interplay of connections.

But. This is really to set the stage for me to talk about things I’m interested in which draw on different aspects of Lisp(s) and lambda calculus and formal or applied applications to do with one or the other.

I was going to talk about Montague Grammar, because it’s fascinating (and it’s my day job), for which lambda calculus is a crucial component.21 But we’re at length now, so it should be another day.

What I do want to look at next is a combination of Lisp and lambda calculus, in various ways, starting with attempts to implement aspects of lambda calculus in Emacs Lisp, and the challenges therein.

[fingers crossed that (next 'blog) does not eval to undefined.]

(Update: (eval (next 'blog)): Part 2: Recursion Excursion.)

-

McCarthy, John. 1960. Recursive functions of symbolic expressions and their computation by machine. Communications of the Association for Computing Machinery 3(4):184-195. [available at the Wayback Archive; page nos. refer to those of the PDF at the link, a reformatted version in LaTeX, not those of the original publication.] ↩︎

-

On Lisp’s mystical aura, see How Lisp Became God’s Own Programming Language (and the associated Hacker News discussion). ↩︎

-

“Lisp ≠ Lambda Calculus” | Daniel Szmulewicz: Perfumed nightmare (A blog for the somnambulisp) ↩︎

-

McCarthy, John. 1978b. Transcript of presentation. History of Lisp. In History of programming languages, ed. Richard L. Wexelblat, 185–191. New York: Association for Computing Machinery. https://dl.acm.org/doi/10.1145/800025.1198361 ↩︎

-

McCarthy, John. 1978a. History of Lisp. In History of programming languages, ed. Richard L. Wexelblat, 173–185. New York: Association for Computing Machinery. https://dl.acm.org/doi/10.1145/800025.1198360 ↩︎

-

Graham, Paul. 2002. The Roots of Lisp. Ms., https://paulgraham.com/rootsoflisp.html. [PDF version] ↩︎

-

See, e.g., Graham’s 2001 “Beating the Averages”. ↩︎

-

On the “Curse of Lisp”, see for instance Rudolf Winestock’s “The Lisp Curse”; but also this 2021 discussion on r/clojure, where it’s mentioned that Winestock said in 2017 in a comment on (one of the innumerable) Hacker News threads on the topic “I wrote that essay five years ago. Nowadays, just use Clojure or Racket and ignore what I’ve written.” ↩︎

-

J. McCarthy: LISP History. Talk at MIT, Spring or Summer 1974 (Written from tape 7/10/75, unpublished: cited in Stoyan, Herbert. 1984. Early LISP history (1956 - 1959). LFP ‘84: Proceedings of the 1984 ACM Symposium on LISP and functional programming, eds. Robert S. Boyer, Edward S. Schneider, Guy L. Steele, 299–310. New York: Association for Computing Machinery. [https://doi.org/10.1145/800055.802047]) (Quote from p.307.) ↩︎

-

Descriptively:

(quote x)returns (the symbol)x(rather than the value ofx).(atom x)returnstif the value ofxis an atom or else'().(eq x y)returnstif the values ofxandyare the same atom, or both the empty list; otherwise returns().(car x)expects the value ofxto beconsexpression of some sort, like a list, and returns its first element.(cdr x)expects the value ofxto beconsexpression of some sort, like a list, and returns everything after the first element.(cons x y)returns an object composed of a link betweenxandy; this is how lists are formed, if the second argument of the innermostconsis().(cond (p₁ e₁)…(pₙ eₙ))evaluates thepexpressions in order until one returnst, at which point it returns the co-indexede.((lambda (p₁…pₙ) e) a₁…aₙ)evaluates eachaᵢexpression and replaces the occurrence of all occurrences of eachpᵢinewith the value of the correspondingaᵢexpression, and then evaluatese.(label f (lambda (p₁…pₙ) e))makes all occurrences of the symbolfbehave like the following(lambda (p₁…pₙ) e)expression, including inside ofe.

-

With just tad bits more sugar to reduce

car/cdrrecursion spam:↩︎(label caar (lambda (x) (car (car x)))) (label cadr (lambda (x) (car (cdr x)))) (label caddr (lambda (x) (car (cdr (cdr x))))) (label cadar (lambda (x) (car (cdr (car x))))) (label caddar (lambda (x) (car (cdr (cdr (car x)))))) -

See McCarthy, John, Paul W. Abrahams, Daniel J. Edwards, Timothy P. Hart, and Michael I. Levin. 1962. LISP 1.5 Programmer’s Manual. Cambridge, Mass.: M.I.T. Press [pdf], at p.36f and also McCarthy (1960:26–7), as well as the Wikipedia page on CAR and CDR. ↩︎

-

Church is also well-known for his proof that the Entscheidungsproblem is undecidable (Church’s theorem), the Church-Turing thesis, the Frege-Church ontology, amongst much else. I don’t know any particularly interesting details of his personal life (he was no Richard Montague, about whom more later); he was apparently a lifelong Presbyterian. ↩︎

-

The

Mhere can be standing in for something more complex, e.g., the whole expression might really beλx.(a (b d)), whereMhere is a place-holder for(a (b d)). ↩︎ -

The α-conversion (fifth) rule is: bound variables can be substituted with different bound variables. So,

λx.M[x]can be α-converted toλy.M[y]. Bound variable names have no significance except as place-holders, so these two expressions are equivalent. The reason for doing this is to avoid name-clashes (see Two Hard Things?) when combining expressions.E.g., if we wanted to combine

λxλy.(x y)with itself, e.g.((λxλy.(x y)) (λxλy.(x y)))(this is of the function application form(M N)), we could do α-conversion so we don’t end up with accidental variable capture.If we didn’t this would happen:

((λxλy.(x y)) (λxλy.(x y)))=((λy.((λxλy.(x y)) y))=(λy.(λy.(y y))Since variables are arbitrary names, whose point is distinctionness, we need instead to α-convert on one of the expressions:

λxλy.(x y)= (by α-conversion)λaλb.(a b)And then we can combine as we tried to before, and correctly arrive at:

((λxλy.(x y)) (λaλb.(a b)))=(λy.((λaλb.(a b)) y))=λy.(λb.(y b))(We’ll talk more about α-conversion and its implement in a later post.) ↩︎

-

Well, there’s also (optional/debated) sixth rule: η-conversion, which touches on some more philosophical concerns (though ones which will eventually concern us too), to do with extensionality (vs. intensionality) [essentionally Frege’s Sinn und Bedeutung].

η-conversion says that in a case where

(f x) = (g x)for all possible values ofx, thenf=g. It’s not always something which is implemented, and it raises questions around extensionality vs intensionality vs hyperintensionality we’ll probably touch on a later point.But, for a taste, by η-conversion (because it’s about extensionality, roughly what’s true in the world rather than conceptually), the following two expressions are identical (

Ghas an extra component involving an identity function):F = λx.xG = λx.(λy.y)xDo they conceptuality (intensionally) count as the same function,

FandG? No, perhaps, but their extensions are the same (they’re always produce the same results), and soFcounts as the α-reduction ofG. ↩︎ -

On the Church λ story, see Cardone, Felice & Hindley, J. Roger, 2006. History of Lambda-calculus and Combinatory Logic. In Gabbay and Woods (eds.), Handbook of the History of Logic, vol. 5. Elsevier. [pdf] On uses of ∧ as notation in formal theories, see here. ↩︎

-

See Wikipedia on Lamedh. ↩︎

-

Church, Alonzo. 1932. A set of postulates for the foundation of logic. Annals of mathematics 33(2):346-366. [pdf] ↩︎

-

Thanks to Dr E.J. Rogers for discussion on this point (Church’s constructivism, and the connection with Frege’s positions). ↩︎

-

And one I’ve worked on implementing in a Lisp at various points, e.g., Frege, the beginnings of a Racket-based DSL for natural language semantics. ↩︎